Enterprise Intelligent Knowledge Base Solution

Business Scenarios and Pain Points

Pain Points

01

Inaccurate enterprise knowledge search

Enterprise knowledge is usually scattered across various business systems and stored in different formats, such as databases, PDF files, and Word documents. Traditional search cannot accurately capture the user's intent or retrieve the relevant content.


02

Tacit knowledge is difficult to acquire

Users must read large amounts of content to find the exact information they need. Concise summaries or direct answers cannot be extracted from large document collections, so the cost of mining tacit knowledge is high.


03

Poor interactive experience

Because traditional search methods lack a deep understanding of natural language queries, their results often lack accuracy and relevance. Results are presented as a list of links that users must click through one by one, which makes information even harder to find and degrades the user experience.


04

High cost of knowledge management

Knowledge acquisition and management often rely on manual maintenance and updates, which consume time and staff resources and increase operating costs.

Enterprise Intelligent Knowledge Base Solution

Accurate information retrieval

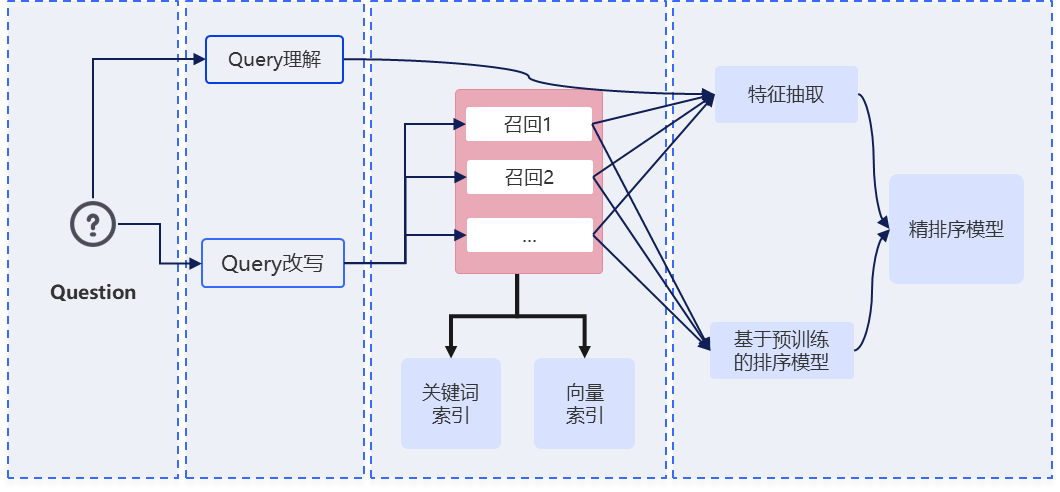

The solution combines large language models (LLMs) with retrieval-augmented generation (RAG) to deliver more accurate search results and strengthen users' trust in them. In-house modules for autonomous answer rejection and hallucination detection ensure that answers are truthful, accurate, and grounded in facts. Fine-grained re-ranking of the retrieved results further improves the accuracy of the final answer.
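The retrieve, re-rank, and reject flow described above can be illustrated with a minimal, self-contained Python sketch. It is not Langboat's implementation: the term-overlap recall stage, the term-frequency cosine scorer standing in for a neural re-ranker, the `min_score` rejection threshold, and every function name here are hypothetical assumptions, and hallucination detection is not shown.

```python
from __future__ import annotations

import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Passage:
    doc_id: str  # source identifier, kept so answers can be traced back
    text: str


def tokenize(text: str) -> list[str]:
    # Naive lowercase whitespace tokenizer that strips surrounding punctuation.
    tokens = (t.strip(".,?!:;").lower() for t in text.split())
    return [t for t in tokens if t]


def coarse_retrieve(query: str, passages: list[Passage], k: int) -> list[Passage]:
    # Stage 1 (recall): cheap term-overlap score; keep the top-k passages
    # that share at least one term with the query.
    q_terms = set(tokenize(query))
    scored = [(len(q_terms & set(tokenize(p.text))), p) for p in passages]
    scored = [(s, p) for s, p in scored if s > 0]
    scored.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in scored[:k]]


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rerank(query: str, candidates: list[Passage]) -> list[tuple[float, Passage]]:
    # Stage 2 (fine ranking): rescore each candidate more carefully.
    # Hypothetical stand-in for a learned re-ranking model.
    q_vec = Counter(tokenize(query))
    scored = [(cosine(q_vec, Counter(tokenize(p.text))), p) for p in candidates]
    return sorted(scored, key=lambda sp: sp[0], reverse=True)


def answer_or_reject(query: str, passages: list[Passage], k: int = 5,
                     min_score: float = 0.3) -> Passage | None:
    # Reject (return None) when even the best re-ranked passage is weak
    # evidence, instead of letting the LLM answer without support.
    ranked = rerank(query, coarse_retrieve(query, passages, k))
    if not ranked or ranked[0][0] < min_score:
        return None
    return ranked[0][1]


corpus = [
    Passage("hr-01", "Employees accrue 15 days of annual leave per year."),
    Passage("it-07", "Reset your VPN password through the self-service portal."),
]
print(answer_or_reject("How many days of annual leave do employees get?", corpus).doc_id)  # hr-01
print(answer_or_reject("What is the cafeteria menu today?", corpus))  # None
```

Separating a cheap, high-recall first stage from a more expensive re-ranking stage is a common RAG design. In a real deployment both stages would typically use learned models, and a threshold like `min_score` would need calibration on real queries.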

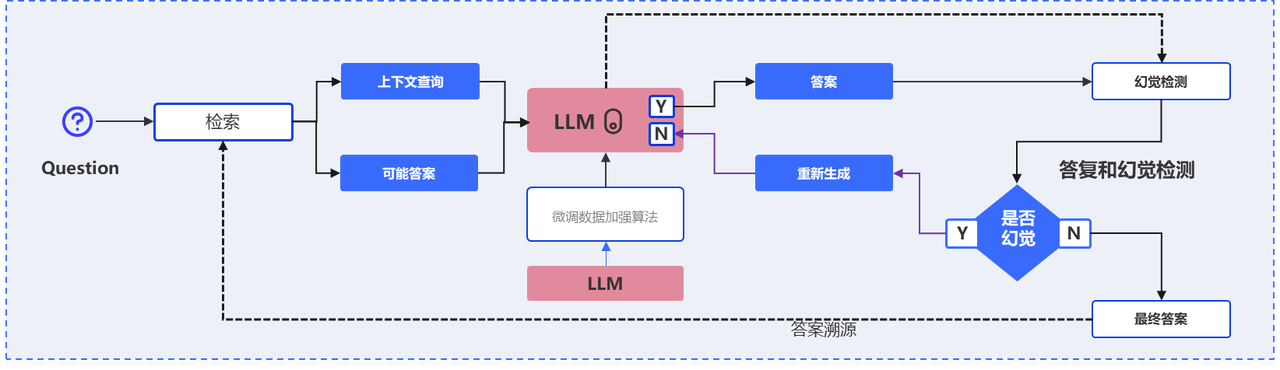

Explore knowledge in depth

Users ask questions directly in natural language and can hold multi-turn conversations that take the prior context into account, enabling in-depth exploration of complex issues. Along the way, the system distills and summarizes information and can trace each answer back to its source.

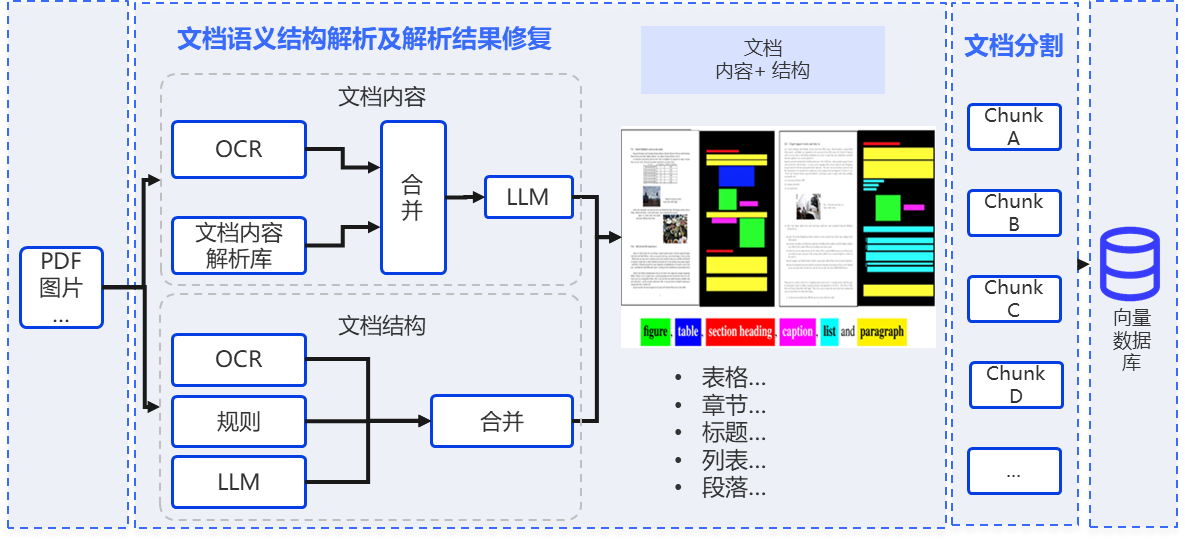

Convenient full-stack data processing

Users can upload documents with one click, and the system builds the knowledge base automatically. Its in-house multi-granularity hierarchical chunking technology splits documents intelligently and automatically. The system also provides semantic structure analysis of documents, including OCR support, for intelligent document processing.
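One common way to realize multi-granularity hierarchical chunking is parent-child chunking: each document is split into progressively finer chunks, and every fine chunk keeps a link to the coarser chunk that contains it. The sketch below is a hypothetical illustration, not Langboat's in-house technique; the document/paragraph/sentence levels, the regex-based splitting, and the names `hierarchical_chunks` and `parent_of` are assumptions, and OCR and semantic structure analysis are not covered.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Chunk:
    chunk_id: str
    level: str  # "document", "paragraph", or "sentence"
    text: str
    parent_id: str | None  # link to the enclosing, coarser chunk


def split_sentences(paragraph: str) -> list[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", paragraph)
    return [s.strip() for s in parts if s.strip()]


def hierarchical_chunks(doc_id: str, text: str) -> list[Chunk]:
    # Build three granularities: whole document -> paragraphs -> sentences.
    chunks = [Chunk(doc_id, "document", text, None)]
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    for i, para in enumerate(paragraphs):
        para_id = f"{doc_id}/p{i}"
        chunks.append(Chunk(para_id, "paragraph", para, doc_id))
        for j, sentence in enumerate(split_sentences(para)):
            chunks.append(Chunk(f"{para_id}/s{j}", "sentence", sentence, para_id))
    return chunks


def parent_of(chunks: list[Chunk], chunk_id: str) -> Chunk | None:
    # Expand a fine-grained hit to its enclosing chunk for more context.
    by_id = {c.chunk_id: c for c in chunks}
    parent_id = by_id[chunk_id].parent_id
    return by_id[parent_id] if parent_id is not None else None


doc = (
    "Leave policy.\n\n"
    "Employees accrue 15 days of annual leave. Unused days expire in March.\n\n"
    "Contact HR for details."
)
chunks = hierarchical_chunks("hr-01", doc)
for c in chunks:
    print(c.level, c.chunk_id, "->", c.parent_id)
print(parent_of(chunks, "hr-01/p1/s1").text)
```

Matching queries against short sentence chunks tends to give precise hits, while returning the parent paragraph supplies the surrounding context an LLM needs to answer. A production splitter would usually also follow headings and page layout, which this sketch ignores.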

Business Value

A more user-friendly interaction experience

Friendly natural language interaction supports search, question answering, knowledge summarization, recommendation, and more, helping users obtain the information they need accurately, greatly reducing document retrieval costs, and improving work and learning efficiency.

Improve the level of knowledge-based decision-making in enterprises

The system intelligently synthesizes, refines, and summarizes knowledge, making tacit knowledge explicit, unlocking its full value, and helping organizations become more intelligent and efficient.

Reduce the cost of building an LLM knowledge base

A loosely coupled enterprise knowledge base is built on an LLM combined with a full-stack retrieval-augmentation system, achieving an optimal balance of efficiency, accuracy, and deployment cost.

Related Products

Mengzi Models

Langboat's in-house large language model, capable of handling multilingual and multimodal data and supporting a wide range of text understanding and text generation tasks. It can rapidly meet the requirements of different domains and application scenarios.

Langboat Smart Knowledge Base

Provides AI search, AI-assisted writing, and other functions to help enterprises rapidly build their own secure and reliable knowledge mid-platform.


Business Cooperation Email

bd@langboat.com

Address

Floor 16, Fangzheng International Building, No. 52 Beisihuan West Road, Haidian District, Beijing, China.

© 2023, Langboat Co., Limited. All rights reserved.

Large Model Registration Code: Beijing-MengZiGPT-20231205
